| ID |

Date |

Author |

Group |

Subject |

|

1

|

28 Jan 2024, 13:50 |

Jan Gruenert | PRC | Issue | RC / BKR informs : Beam is down (since 12h10), all beamlines affected

The reason is failure of a quadrupole (power supply) downstream of the dogleg.

The MPS crew is working on recovery, access to XTL ongoing.

RC will inform when new estimates come in on when we can restart beam operation.

Currently affected on Photon side:

- SA1: Naresh (XPD) and Marco (DET) working on Gotthard-II commissioning. Naresh is present at BKR.

- SA2/SA3: Antje + Mikako (XRO) working on SA2 mirror flipping tests and SA3 exit slit check. Both are located at SXP control hutch.

Other XRO members will come in for the afternoon/evening shift work. Antje will inform them via chat. |

|

83

|

18 Apr 2024, 19:31 |

Jan Gruenert | PRC | Status | The intra-bunchtrain feedback (IBFB) was not active until this afternoon.

Although not strictly required or requested for the current week experiments,

it was decided to turn on this feedback, which should generally be active to

stabilize and improve the arrival time of the photon pulses along pulse trains.

In order to avoid complications during long data acquisitions, the PRC checked back with all ongoing experiments,

identified a suitable time slot, and the IBFB was activated without issues at around 16h45. |

|

85

|

20 Apr 2024, 23:58 |

Jan Gruenert | PRC | SA2 status | 20.4.2024 / 22h51:

DOC informed PRC that the SASE2 topic broker had to be restarted.

The EPS power limit was reset by PRC to the previous value of 40 W.

DOC and DRC are working on the restart. HED is currently changing its hardware setup configuration (not taking beam, thus no harm to the experiment),

and will start taking beam with new users on Sunday at 7am, but needs some test runs for the detectors before then.

Currently the SA2 topic watchdogs (for ATT, CRL, imagers) cannot be restarted - they crash quickly after restarting.

DOC/DRC are investigating this issue. Until the watchdogs are successfully restarted, extra care must be taken when using ATT, CRL, imagers in SA2.

Update 21.4.2024 / 1h43 by DRC:

In a similar fashion to the incident of last 5.04, the Broker process for the SA2 and LA2 topic has restarted.

This was noticed by the DOC crew and by the HED crew in parallel.

With the help of the CTRL OCD (Micheal Smith) we restarted both topics. As a lesson learned from the last interruption, the POWER_LIMIT

middlelayer device has been set up by the PRC, so that when the DOOCS bridges will be restarted by the DESY-MCS personnel,

the maximum power will not be set to 0.

Please check in due time if all systems are working as intended.

|

|

173

|

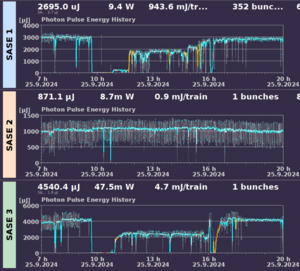

25 Sep 2024, 09:47 |

Jan Gruenert | PRC | Issue | Issue with SA3 Gas attenuator (SA3_XTD10_GATT)

9h06: SQS informs PRC that the Argon line in GATT is not reacting on pressure change commands, no GATT operation, which is required to reduce pulse energies for alignment. VAC-OCD is informed.

ZZ access is required to check hardware in the tunnel XTD10.

SA1 / SPB is working on other problems in their hutch (water leaks) and is not taking beam.

SA3 / SQS will work with the optical laser.

SA2 / MID will not be affected: there is also an issue in the hutch and MID is currently not taking beam into the hutch, and would in any case be affected only very briefly, at the moment of decoupling the beamline branches.

9h45: BKR decouples North Branch (SA1/SA3) to enable ZZ access for the VAC colleagues.

ZZ access of VAC colleagues.

10h12: The problem is solved. A manual intervention in the tunnel was indeed necessary. VAC colleagues are leaving the tunnel.

- Beam down SASE1: 9h43 until 10h45 (1 hour no beam), tuning done at 11h45, then 1.7mJ at 6keV single bunch.

- Beam down SASE3: 9h43 until 10h56 (1.25 hours no beam). Before intervention 4.2mJ average with 200 bunches, afterwards 2.5mJ at 400 eV / 3nm single bunch.

|

| Attachment 1: JG_2024-09-29_um_12.06.37.png

|

|

|

180

|

27 Sep 2024, 15:17 |

Jan Gruenert | PRC | Status | Bunch pattern SA1 / SA3

SQS requests to change the bunch pattern.

Currently SA1/SA3 run interleaved as 131313...

The first bunch in the train (for SA1, non-lasing in SA3) nevertheless is visible as a small pre-peak in the diode signal which SQS uses to check the timing.

Therefore SQS requests to change to 31313131....
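As an illustration only, the two patterns can be written out as alternating sequences of destination labels. The function name and the 8-bunch length are assumptions made for this sketch and are not part of any bunch pattern tooling.

```python
from itertools import cycle, islice


def interleaved_pattern(first: str, second: str, n_bunches: int) -> str:
    """Return an alternating destination pattern of length n_bunches."""
    return "".join(islice(cycle((first, second)), n_bunches))


# Current pattern: the first bunch in the train goes to SA1.
current = interleaved_pattern("1", "3", 8)
# Requested pattern: the first bunch in the train goes to SA3.
requested = interleaved_pattern("3", "1", 8)

print(current)    # 13131313
print(requested)  # 31313131
```

With the requested pattern, the first bunch in the train is an SA3 bunch, so the SA1 pre-peak no longer precedes the SA3 signal on the diode.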

It was checked with SA1 (SPB/SFX) and the accelerator experts that this should not cause any issues (AGIPD detector is running on dynamic trigger),

therefore it was agreed that SPB/SFX informs BKR when there is a good moment without data acquisition, and BKR will make this change after informing SQS.

This change will happen within the next hour (as of 15h20). |

|

185

|

29 Sep 2024, 11:42 |

Jan Gruenert | PRC | Issue | A2 failure - downtime

The machine / RC informs (11h22) that there is an issue with the accelerator module A2 (first module in L1, right after the injector).

This causes a downtime of the accelerator of 1 hour (from 10h51 to 11h51), affecting all photon beamlines. |

|

186

|

29 Sep 2024, 12:13 |

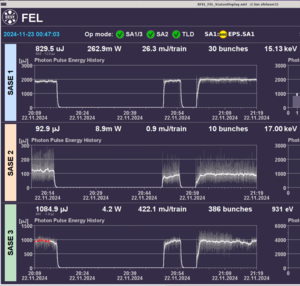

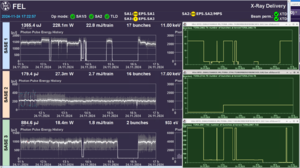

Jan Gruenert | PRC | Status | Beam is back. Current status:

SASE1 (SPB/SFX)

- 2.72 mJ, 352 bunches/train, 6 keV

SASE2 (MID)

- 880 uJ, 1 bunch/train, 8 keV

SASE3 (SQS)

- 4.56 mJ, 1 bunch/train, 400 eV (3.1nm)

|

| Attachment 1: JG_2024-09-29_um_12.12.28.png

|

|

|

201

|

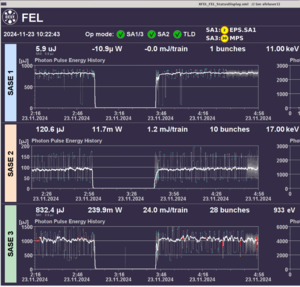

23 Nov 2024, 00:36 |

Jan Gruenert | PRC | Issue | 20h16: beam down in all beamlines due to a gun problem

20h50: x-ray beams are back

Shortly afterwards, an additional 5 min of downtime (20h55 - 21h00) for all beamlines, then the gun issue is solved.

After that, SA1 and SA3 are roughly back to the same performance, but SA2 HXRSS is down from 130 uJ before to 100 uJ after the downtime.

Additionally, MID informs at 21h07 that no X-rays at all arrive at their hutch anymore. Investigation with PRC.

At 21h50, all issues are mostly settled, MID has beam, normal operation continues.

Further HXRSS tuning and preparation for limited range photon energy scanning will happen in the night. |

| Attachment 1: JG_2024-11-23_um_00.47.04.png

|

|

|

202

|

23 Nov 2024, 00:48 |

Jan Gruenert | PRC | Issue | Broken Bridges

Around 21h20 the XPD group notices that several karabo-to-DOOCS bridges are broken.

The immediate effect is that in DOOCS all XGMs in all beamlines show warnings

(because they no longer receive important status information from karabo, for instance the insertion status of the graphite filters required at this photon energy).

PRC contacts Tim Wilksen / DESY to repair these bridges after PRC checked with DOC, who confirmed that there were no issues on the karabo side.

Tim finds that ALL bridges are down. The root cause is a failing computer that hosts all these bridges, see his logbook entry:

https://ttfinfo.desy.de/XFELelog/show.jsp?dir=/2024/47/22.11_a&pos=2024-11-22T21:47:00

This also explains why HIREX data was no longer available in DOOCS for the accelerator team tuning SA2-HXRSS.

The issue was resolved by Tim and his colleagues at 21h56 and had persisted since 20h27 when that computer crashed (thus 1.5h downtime of the bridges).

Tim will organize with BKR to put a status indicator for this important computer hosting all bridges on a BKR diagnostic panel. |

|

204

|

23 Nov 2024, 10:23 |

Jan Gruenert | PRC | Issue | Broken Radiation Interlock

Beam was down for all beamlines due to a broken radiation interlock in the SPB/SFX experimental hutch.

The interlock was broken accidentally with the shutter open, see https://in.xfel.eu/elog/OPERATION-2024/203

Beam was down for 42 min (from 3h00 to 3h42) but the machine came back with the same performance. |

| Attachment 1: JG_2024-11-23_um_10.22.43.png

|

|

|

205

|

23 Nov 2024, 10:27 |

Jan Gruenert | PRC | Issue | SA1 Beckhoff issue

SA1 blocked to max. 2 bunches and SA3 limited to max 29 bunches

At 9h46, SA1 goes down to single bunch. PRC is called because SA3 (SCS) cannot get the required >300 bunches anymore. (at 9h36 SA3 was limited to 29 bunches/train)

FXE, SCS, DOC are informed, DOC and PRC identify that this is a SA1 Beckhoff problem.

Many MDLs in SA1 are red across the board, e.g. SRA, FLT, Mirror motors. Actual hardware state cannot be known and no movement possible.

Therefore, EEE-OCD is called in. They check and identify out-of-order PLC crates of the SASE1 EPS and MOV loops.

Vacuum and VAC PLC seem unaffected.

EEE-OCD needs access to tunnel XTD2. It could be a fast access if it is only a blown fuse in an EPS crate.

This will, however, require decoupling the North branch and stopping beam operation entirely for SA1 and SA3.

Anyhow, SCS confirms to PRC that 29 bunches are not enough for them and FXE also cannot go on with only 1 bunch, effectively it is a downtime for both instruments.

Additional oddity: there is still one pulse per train delivered to SA1 / FXE, but there is no pulse energy in it! XGM detects one bunch but with <10uJ.

It is unclear why; PRC, FXE, and BKR are looking into it until EEE goes into the tunnel.

In order not to ground the magnets in XTD2, a person from MPC / DESY has to accompany the EEE-OCD person.

This is organized and they will meet at XHE3 to enter XTD2 from there.

VAC-OCD is also aware and checking their systems and on standby to accompany to the tunnel if required.

For the moment it looks as if the SA1 vacuum system and its Beckhoff controls are ok and not affected.

11h22: SA3 beam delivery is stopped.

In preparation of the access the North branch is decoupled. SA2 is not affected, normal operation.

11h45 : Details on SA1 control status. Following (Beckhoff-related) devices are in ERROR:

- SA1_XTD2_VAC/SWITCH/SA1_PNACT_CLOSE and SA1_XTD2_VAC/SWITCH/SA1_PNACT_OPEN (this limits bunch numbers in SA1 and SA3 by EPS interlock)

- SA1_XTD2_MIRR-1/MOTOR/HMTX and HMTY and HMRY, but not HMRX and HMRZ

- SA1_XTD2_MIRR-2/MOTOR/* (all of them)

- SA1_XTD2_FLT/MOTOR/

- SA1_XTD2_IMGTR/SWITCH/*

- SA1_XTD2_PSLIT/TSENS/* but not SA1_XTD2_PSLIT/MOTOR/*

- more ...
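The wildcard notation in the list above can be read as shell-style patterns. The following is a minimal sketch, assuming such patterns are matched with Python's standard `fnmatch` module; the helper name `is_listed_in_error` and the `ANY_AXIS` device names are hypothetical, and the truncated FLT entry and the "more ..." entries are deliberately left out.

```python
from fnmatch import fnmatchcase

# Patterns copied from the list above; "*" means "every device below this path".
ERROR_PATTERNS = [
    "SA1_XTD2_VAC/SWITCH/SA1_PNACT_CLOSE",
    "SA1_XTD2_VAC/SWITCH/SA1_PNACT_OPEN",
    "SA1_XTD2_MIRR-1/MOTOR/HMTX",
    "SA1_XTD2_MIRR-1/MOTOR/HMTY",
    "SA1_XTD2_MIRR-1/MOTOR/HMRY",
    "SA1_XTD2_MIRR-2/MOTOR/*",
    "SA1_XTD2_IMGTR/SWITCH/*",
    "SA1_XTD2_PSLIT/TSENS/*",
]


def is_listed_in_error(device_id: str) -> bool:
    """Return True if device_id matches one of the patterns listed at 11h45."""
    return any(fnmatchcase(device_id, pattern) for pattern in ERROR_PATTERNS)


# HMRX is explicitly named as not affected, HMTX as affected.
print(is_listed_in_error("SA1_XTD2_MIRR-1/MOTOR/HMTX"))  # True
print(is_listed_in_error("SA1_XTD2_MIRR-1/MOTOR/HMRX"))  # False
# Hypothetical device names, used only to exercise the wildcard entries.
print(is_listed_in_error("SA1_XTD2_MIRR-2/MOTOR/ANY_AXIS"))  # True
print(is_listed_in_error("SA1_XTD2_PSLIT/MOTOR/ANY_AXIS"))   # False
```

`fnmatchcase` is used rather than `fnmatch` so that matching stays case-sensitive on every platform.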

12h03 Actual physical access to XTD2 has begun (team entering).

12h25: the EPS loop errors are resolved, FLT, SRA, IMGTR, PNACT all ok. Motors still in error.

12h27: the MOTORs are now also ok.

The root causes and failures will be described in detail by the EEE experts, here only in brief:

Two PLC crates lost communication to the PLC system. Fuses were ok. These crates had to be power cycled locally.

Now the communication is re-established, and the devices on EPS as well as MOV loop have recovered and are out of ERROR.

12h35: PRC and DOC checked the previously affected SA1 devices and all looks good, team is leaving the tunnel.

13h00: Another / still a problem: FLT motor issues (related to EPS). This component is now blocking EPS. EEE and device experts working on it in the control system. They find that they again need to go to the tunnel.

13h40 EEE-OCD is at the SA1 XTD2 rack 1.01 and it smells burnt. Checking fuses and power supplies of the Beckhoff crates.

14h: The SRA motors and FLT are both depending on this Beckhoff control.

EEE decides to remove the defective Beckhoff motor terminal because voltage is still delivered and there is the danger that it will start burning.

We proceed with the help of the colleagues in the tunnel and the device expert to manually move the FLT manipulator to the graphite position,

and looking at the operation plan photon energies it can stay there until the WMP.

At the same time we manually check the SRA slits, the motors are running ok. However, removing that Beckhoff terminal also disables the SRA slits.

It would require a spare controller from the labs, thus we decide in the interest of going back to operation to move on without being able to move the SRA slits.

14h22 Beam is back on. Immediately we test that SA3 can go to 100 bunches - OK. At 14h28 they go to 384 bunches. OK. Handover to SCS team.

14h30 Realignment of SA1 beam. When inserting IMGFEL to check whether the SRA slits are clipping the beam, it is found after some tests with the beamline attenuators and the other scintillators that there is a damage spot on the YAG! See next logbook entry and ATT#3. The damage is not on the graphite filter, on the attenuators, or in the beam, as we had initially suspected.

The OCD colleagues from DESY-MPC and EEE are released.

The SRA slits are not clipping now. The beam is aligned to the handover position by RC/BKR. Beam handover to FXE team around 15h. |

| Attachment 1: JG_2024-11-23_um_12.06.35.png

|

|

| Attachment 2: JG_2024-11-23_um_12.10.23.png

|

|

| Attachment 3: JG_2024-11-23_um_14.33.06.png

|

|

|

206

|

23 Nov 2024, 15:11 |

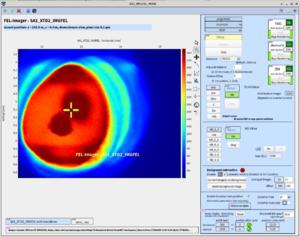

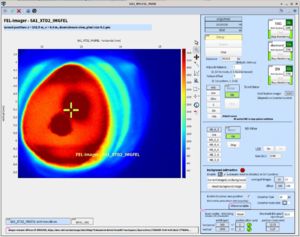

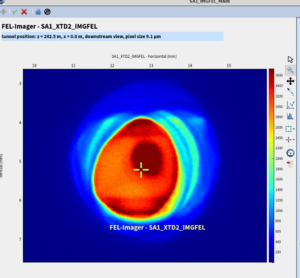

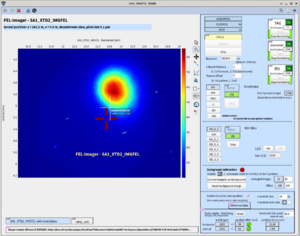

Jan Gruenert | PRC | Issue | Damage on YAG screen of SA1_XTD2_IMGFEL

A damage spot was detected during the restart of SA1 beam operation at 14h30.

To confirm that it is not something in the beam itself or a damage on another component (graphite filter, attenuators, ...), the scintillator is moved from YAG to another scintillator.

ATT#1: shows the damage spot on the YAG (lower left dark spot, the upper right dark spot is the actual beam). Several attenuators are inserted.

ATT#2: shows the YAG when it is just moved away a bit (while moving to another scintillator, but beam still on YAG): only the beam spot is now seen. Attenuators unchanged.

ATT#3: shows the situation with BN scintillator (and all attenuators removed).

The XPD expert will be informed about this newly detected damage so that it can be followed up. |

| Attachment 1: JG_2024-11-23_um_14.33.06.png

|

|

| Attachment 2: JG_2024-11-23_um_14.40.23.png

|

|

| Attachment 3: JG_2024-11-23_um_15.14.33.png

|

|

|

207

|

24 Nov 2024, 13:47 |

Jan Gruenert | PRC | Issue | Beckhoff communication loss SA1-EPS

12h19: BKR informs PRC about a limitation to 30 bunches in total for the north branch.

The property "safety_CRL_SA1" is limiting in MPS.

DOC and EEE-OCD and PRC work on the issue.

Just before this, DOC had been called and informed EEE-OCD about a problem with the SA1-EPS loop (many devices red), which was very quickly resolved.

However, "safety_CRL_SA1" had remained limiting, and SA3 could only do 29 bunches but needed 384 bunches.

EEE-OCD together with PRC followed the procedure outlined in the radiation protection document

Procedures: Troubleshooting SEPS interlocks

https://docs.xfel.eu/share/page/site/radiation-protection/document-details?nodeRef=workspace://SpacesStore/6d7374eb-f0e6-426d-a804-1cbc8a2cfddb

The device SA1_XTD2_CRL/DCTRL/ALL_LENSES_OUT was in OFF state although PRC and EEE confirmed that it should be ON, an aftermath of the EPS-loop communication loss.

EEE-OCD had to restart / reboot this device on the Beckhoff PLC level (no configuration changes); it then loaded the configuration values correctly and came back in the ON state.

This resolved the limitation in "safety_CRL_SA1".

All beamlines operating normally. Intervention ended around 13h30. |

|

208

|

24 Nov 2024, 14:09 |

Jan Gruenert | PRC | Issue | Beckhoff communication loss SA1-EPS (again)

14h00 Same issue as before, but now EEE can no longer clear the problem remotely.

An access is required immediately to repair the SA1-EPS loop to regain communication.

EEE-OCD and VAC-OCD are coming in for the access and repair.

PRC update at 16h30 on this issue

One person each from EEE-OCD and VAC-OCD and DESY-MPC shift crew made a ZZ access to XTD2 to resolve the imminent Beckhoff EPS-loop issue.

The communication coupler for the fiber to EtherCat connection of the EPS-loop was replaced in SA1 rack 1.01.

Also, a new motor power terminal was inserted (replacing the one that had been smoking and was removed yesterday) to close the loop and regain redundancy.

All fuses were checked. However, the functionality of the motor controller in the MOV loop could not yet be repaired, thus another ZZ access

after the end of this beam delivery (e.g. next Tuesday) is required to make the SRA slits and FLT movable again. EEE-OCD will submit the request.

Moving FLT is not crucial now, and given the planned photon energies as shown in the operation plan, it can wait for repair until WMP.

Moving the SA1 SRA slits is also not strictly required right now (until Tuesday), but it will be needed for further beam delivery in the next weeks.

Conclusions:

In total, this afternoon SA1+SA3 were about 3 hours limited to max. 30 bunches.

The ZZ-access lasted less than one hour, and SA1/3 thus didn't have any beam from 15h18 to 16h12 (54 min).

Beam operation SA1 and SA3 is now fully restored, SCS and FXE experiments are ongoing.

The SA1-EPS-loop communication failure is cured and should thus not come back again.

At 16h30, PRC and SCS made a test that 384 bunches can be delivered to SA3 / SCS. OK.

Big thanks to all colleagues who were involved to resolve this issue: EEE-OCD, VAC-OCD, DOC, BKR, RC, PRC, XPD, DESY-MPC

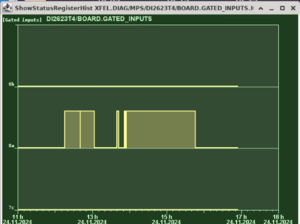

Timeline + statistics of safety_CRL_SA1 interlockings (see ATT#1)

(these are essentially the times during which SA1+SA3 together could not get more than 30 bunches)

12h16 interlock NOT OK

13h03 interlock OK

duration 47 min

13h39 interlock NOT OK

13h42 interlock OK

duration 3 min

13h51 interlock NOT OK

13h53 interlock OK

duration 2 min

13h42 interlock NOT OK

15h45 interlock OK

duration 2h 3min
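The durations above can be cross-checked with a few lines of Python. This is only a sketch: the interval times are copied as listed (including the last start time, which is kept exactly as written), and the variable names are chosen for illustration.

```python
from datetime import datetime, timedelta

# (interlock NOT OK, interlock OK) times in the logbook's "13h03" notation.
INTERVALS = [
    ("12h16", "13h03"),
    ("13h39", "13h42"),
    ("13h51", "13h53"),
    ("13h42", "15h45"),
]


def parse(hhmm: str) -> datetime:
    """Parse a time written as e.g. '13h03' (same day assumed)."""
    return datetime.strptime(hhmm, "%Hh%M")


durations = [parse(end) - parse(start) for start, end in INTERVALS]
minutes = [int(d.total_seconds() // 60) for d in durations]
total = sum(durations, timedelta())

print(minutes)  # [47, 3, 2, 123]
print(total)    # 2:55:00
```

The sum of 175 min is consistent with the roughly 3 hours of limitation to max. 30 bunches stated in this entry.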


The ZZ-access lasted less than one hour, and thus SA1/3 together didn't have any beam at all from 15h18 to 16h12 (54 min).

SA3 had beam with 29 or 30 pulses until start of ZZ, while SA1 already lost beam for good at 13h48 until 16h12 (2h 24 min).

SA3/SCS needed 386 pulses which they had until 11h55, but this afternoon they only got / took 386 pulses/train from 13h04-13h34, 13h42-13h47 and after the ZZ. |

| Attachment 1: JG_2024-11-24_um_16.54.02.png

|

|

|

209

|

24 Nov 2024, 17:23 |

Jan Gruenert | PRC | Status | Status

All beamlines in operation.

The attachment ATT#1 shows the beam delivery of this afternoon, with the pulse energies on the left and the number of bunches on the right.

SA2 was not affected, SA1 was essentially without beam from 13h45 to 16h15 (2.5h) and SA3 didn't get enough (386) pulses in about the same period.

Beam delivery to all experiments is ok since ~16h15, and SCS is just not taking more pulses for other (internal) reasons. |

| Attachment 1: JG_2024-11-24_um_17.22.58.png

|

|

|

214

|

04 Dec 2024, 15:34 |

Jan Gruenert | PRC | Status | Beam delivery Status well after tuning finished (which went faster than anticipated). |

| Attachment 1: JG_2024-12-04_um_15.33.09.png

|

|

|

216

|

05 Dec 2024, 07:38 |

Jan Gruenert | PRC | Issue | Accelerator module issue

7h20: BKR/RC inform that there was an issue with the A12 Klystron filament, which had caused a short downtime (6h15-6h22), and that A12 was taken off beam.

Beam was recovered in all beamlines, but the pulse energy came back lower, especially in SA1 (before 920 uJ, after about 500 uJ), and with higher fluctuations.

All experiments were contacted to find a convenient time for the required 15-30min without beam to recover A12, and all agreed to perform this task immediately.

The intervention actually lasted only a few minutes (7h38-7h42) and restored all intensities to the original levels (averages over 5min):

- SA1 (18 keV 1 bunch): MEAN=943.8 uJ, SD=56.08 uJ

- SA2 (17 keV 128 bunches): MEAN=1071 uJ, SD=148.3 uJ

- SA3 (1 keV 410 bunches): MEAN=347.5 uJ, SD=78.06 uJ
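To compare the fluctuations between beamlines, the quoted values can be expressed as relative fluctuations (SD divided by MEAN). The sketch below only reuses the numbers listed above; the helper name is an assumption for illustration.

```python
# (MEAN, SD) in uJ, as quoted above for the 5 min averages.
STATS = {
    "SA1": (943.8, 56.08),
    "SA2": (1071.0, 148.3),
    "SA3": (347.5, 78.06),
}


def relative_fluctuation(mean_uj: float, sd_uj: float) -> float:
    """Return the standard deviation as a percentage of the mean."""
    return 100.0 * sd_uj / mean_uj


for name, (mean_uj, sd_uj) in STATS.items():
    print(f"{name}: {relative_fluctuation(mean_uj, sd_uj):.1f} %")
# SA1: 5.9 %
# SA2: 13.8 %
# SA3: 22.5 %
```

Note that the three beamlines run at different photon energies and bunch numbers, so these percentages are not directly comparable as a measure of machine stability.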

The issue seems resolved at 7h42. |

| Attachment 1: JG_2024-12-05_um_07.30.17.png

|

|

|

218

|

05 Dec 2024, 15:58 |

Jan Gruenert | PRC | Issue | additional comment to MESSAGE ID: 217

PRC was informed by MID at 8h50 about a newly appeared limitation to about 60 pulses / train (MID operated previously at 120 pulses/train).

It turned out that this limitation came from the protection of the SA2_XTD1_ATT attenuator, in particular the Si attenuator.

MID had not noticed this before probably due to the fact that after bringing back A12 online, there was a bit higher pulse energy for MID, and MID changed the attenuation settings.

MID could continue working with the full required 120 pulses / train by inserting more diamond attenuators in XTD1 instead of Silicon and using additional attenuators downstream. |

|

3

|

27 Jan 2024, 15:17 |

Idoia | XRO | Shift summary | SASE3

Open Issues:

- currently it is not possible to change the number of pulses because of the instruments' beam permission interlock

Done:

- VSLIT was calibrated with diffraction fringes (it was off by 41 microns, the bottom blade encoder was recalibrated to compensate for this error)

https://in.xfel.eu/elog/XRO+Beam+Commissioning/319

- various setting points were checked at 20 mrad using mirror switch, no particular problems found

https://in.xfel.eu/elog/XRO+Beam+Commissioning/310

https://in.xfel.eu/elog/XRO+Beam+Commissioning/306

- ESLITS width was measured using diffracted beam. No Calibration was applied, we will discuss with instruments if this is needed/wished

https://in.xfel.eu/elog/XRO+Beam+Commissioning/323

https://in.xfel.eu/elog/XRO+Beam+Commissioning/324

https://in.xfel.eu/elog/XRO+Beam+Commissioning/325

- ATTENTION: SQS and SXP ESLITS motors are now moving in the opposite direction compared to before (please look at the updated picture on the scene). Software limit switches are real limits: do not deactivate them

SCS ESLIT was already configured this way: now all the ESLITs are configured similarly (no Elmo controllers anymore)

- SRA was recalibrated

https://in.xfel.eu/elog/XRO+Beam+Commissioning/313

- FEL imager cross adjusted to GATT

https://in.xfel.eu/elog/XRO+Beam+Commissioning/307

SASE2

Open Issues

- HED Mono1: beam still not going through to the HED popin. Parallelism of the crystals achieved. Transmission is ok up to the Beam Monitor but not further.

Beam height was checked but the problem remained

Done:

- M3 mirror realigned, Euler motion roughly calibrated.

ATTENTION: Old saved values are no longer usable. "XRO setpoints" must be used as a starting point for further alignment

- Positions C and E were saved

- The fast flipping positions were almost found, see here: https://in.xfel.eu/elog/XRO+Beam+Commissioning/322

Unfortunately, beam was suddenly off and the procedure was not complete

- No particular problems found

SASE1

- FXE and SPB trajectory rechecked, no particular problems found

ATTENTION:

- M1 & M2 motors were found with wrong scaling factors (for some time already). They were corrected while maintaining the mirror positions. (Most affected were the M1 vertical motors, which are rarely used).

- saved positions are using the "fast flipping option" meaning that you can switch between the two only using M2 RY

https://in.xfel.eu/elog/XRO+Beam+Commissioning/283

https://in.xfel.eu/elog/XRO+Beam+Commissioning/282

- CRL1 motor calibrations were lost, and restored from 6th Dec. If there are further issues, contact Control. |

|

130

|

23 Jul 2024, 17:17 |

Idoia | XRO | Shift summary |

- ZZ access at XTD10

- The LE PM X and HE PM X motors were checked and they are not physically moving. It was also noticed that the encoder values aren't changing. -> a second ZZ was done and the problem was solved. (check entry https://in.xfel.eu/elog/XRO+Beam+Commissioning/412)

- The SQS exit slit was checked and the blades are moving but the macro to calculate the gap size isn't working. We left the blades in completely open state. The issue was solved, see entry https://in.xfel.eu/elog/XRO+Beam+Commissioning/401

- SA3, SQS alignment (with LE PM in compromised position)

- 20mrad LEPM Blank aligned, https://in.xfel.eu/elog/XRO+Beam+Commissioning/394

- 20mrad LEPM HR grating was aligned, https://in.xfel.eu/elog/XRO+Beam+Commissioning/395

- 13mrad LEPM blank was tested until M2-Pop-In (LE motor does not move to the 13 mrad setpoint), https://in.xfel.eu/elog/XRO+Beam+Commissioning/396

- Checking the components SA3

- SXP alignment

- Attempt to align @ 20 mrad, LE-PM, Blank (could not see reflection on M5 popin)

- LE-PM, blank reflection found on Mono-Popin, LE-PM motor position was changed, https://in.xfel.eu/elog/XRO+Beam+Commissioning/409

- SA2 alignment

- 2.1 mrad alignment to MID, then to HED ==> updated in the XRO setpoints "B" (2.1mrad B4C)

- Beam stability in this configuration recorded in Proposal 900450 run 1 (~ 5min), https://in.xfel.eu/elog/XRO+Beam+Commissioning/399

- SA2 PBLM grating

- Blade Right and Left were inserted at the 2.1 mrad condition, https://in.xfel.eu/elog/XRO+Beam+Commissioning/397

- no beam transmission ==> likely the grating hole is too low relative to the beam height

- SA2 vibration tests

- SA1 beam aligned

- beam aligned for FXE and SPB, 2.3mrad offset at 9.3keV, https://in.xfel.eu/elog/XRO+Beam+Commissioning/404, https://in.xfel.eu/elog/XRO+Beam+Commissioning/408

|

|