| ID | Date | Author | Group | Subject |

|

369

|

27 Sep 2023, 07:24 |

Romain Letrun | SPB | Shift summary | Shift summary for 26.9.2023

X-ray delivery

- 6.3 keV, ~4 mJ, 1-352 pulses at 1.13 MHz

- Great performance and stable delivery with few minor interruptions

Optical laser delivery

Achievements/Observations

- Aligned MKB trajectory to 1.5m detector distance configuration

- Some work can still be done on the background

- Scattering laser setup

- A few runs with silver cubes taken at the end, with some time borrowed from FXE

- Good focus with MKB B4C strip

Issues

- Optical laser not available

- Historical data from some devices unavailable in Karabo. Solved by DOC

|

|

368

|

24 Sep 2023, 09:24 |

Peter Zalden | FXE | Shift summary | 7:00 Power glitch during SPB operation - they were supposed to hand the beam back at 7:30h

- Power glitch brought down FXE interlock system with beam stop monitor alarm that could not be reset

- SA1 PPL running fine, seems unaffected

9:10 Beam back in SA1, but still at 7 keV and cannot be changed by BKR to the req'd 5.8 keV, 94 kHz.

10:00 Beam back with FXE at req'd photon energy

Collected pump-probe diffraction and XAS data for the user expt. No further issues. |

|

367

|

24 Sep 2023, 09:16 |

Peter Zalden | FXE | Shift summary | Summary for 23 September

6:55 SPB just handed the beam over to FXE when the power glitch killed the interlocks and brought down ACC

11:30 After a lot of communication with PRC, Wolfgang Clement and DOC, the beam is back in SA1. We request change to 5.5 keV

- Most of the time was spent recovering the AIBS

- Interlock was reset by Wolfgang Clement on-site

- Pandora X-05 in SXP hutch had no power, but recovered after TS restored power in SA3

12:30 We finished alignment and collected some user data (XRD)

16:00 Failure of the optical laser shutter in ILH. Seems not operational.

- An ad hoc procedure is being established to circumvent the faulty laser shutter (coordinated with SLSO, LSO, GL and Management)

18:00 FXE is able to take user data again (XAS)

23:30 Beam handed over to SPB (thanks for the extra time to finish a promising measurement)

|

|

365

|

24 Sep 2023, 08:05 |

Jan Gruenert | PRC | Status | 8h05 Beam is switched back on in SASE2. HED ready for taking beam.

8h45 BKR is having problems to turn on SA2 beam. Not due to any

Beam actually went down in all beamlines today at 7:02 (for the record).

9h00: Electricity comes back to SASE3.

We can see SCS-XGM crate coming back on (that's how we can tell that power is back).

9h15 BKR struggles with SA2

9h20: Info from TS-OCD: Problem with USV at SASE3, all needs to be switched

9h30 BKR is working on making beam in SA1/3. SA1 beam on IMGFEL, switching to FXE conditions (5.6 keV).

9h08 FXE info:

D3 has reset the alarms for SA1 beamstop monitor 9.1 (burnthrough monitor) and SA3. FXE is ready to receive beam.

9h04 EEE-OCD informs:

Power has been restored to the LA3 PPL PLC

9h08 DOC info:

restoring settings for LA3 PPL as of before today's power cut.

|

|

366

|

24 Sep 2023, 07:49 |

Natalia, Justina, Giacomo, Sergey, Ben, Eugenio, Teguh | SCS | summary of 23.09 | Around 6:55 there was a power glitch. Power in the experimental hutch and rack room went down in SASE3, error message in Xray interlock system and no power for PP laser.

During the morning TS restored power and the interlock issues were solved by DESY. SCS could restart a large part of the SCS systems, but received help from DOC only after 2:30 p.m. Similarly, the PP laser needed help from DOC and received it only around 2 p.m. The afternoon shift was mainly spent bringing the instrument back into operation with the help of DOC. Around 9 p.m. we realized that the PP laser was not in an operational state because of large fluctuations; this had not been communicated to SCS at all. After calling the Laser group, SCS was told that the laser group would work on recovering the laser on Sunday morning. Without the laser we could not continue the user program, so at least 24 h of user operation were lost.

|

|

364

|

24 Sep 2023, 07:28 |

Raphael de Wijn | SPB | Shift summary | X-ray delivery

- 7 keV, 202 pulses, 1.6 mJ, stable across train and throughout the shift

- Beam down at 7am again, power glitch same as yesterday

Achievements/Observations

- faster changeover and alignment

- YAG on nozzle worked

- collected data with the droplets-in-oil sample delivery method - many good hits observed

- still working on the synchronisation with the x-ray pulses

|

|

363

|

24 Sep 2023, 07:19 |

Jan Gruenert | PRC | Attention | Again: power outage !

As yesterday, at around 7am, power was lost in Schenefeld at SASE3. No beam permission, accelerator in TLD mode.

SASE3 has no power, TS-OCD informed and on the way. BKR informs MKK to check cooling water pumps.

PRC is requesting everyone involved yesterday to take action according to what happened yesterday. |

|

362

|

23 Sep 2023, 23:31 |

Motoaki Nakatsutsumi | HED | Shift summary | During the morning shift (7am - 3pm), we couldn't take any meaningful data because of the power glitch, which caused the beam permission error (AIBS) and brought down SASE2 Karabo / Online-GPFS due to the lack of cooling in the rack room.

During the late shift (3 pm - 11 pm), after these issues were solved thanks to PRC/DOC/ITDM/EEE at 5 pm..., we could get back in operation relatively quickly. Although there were a number of issues here and there (most of the devices needed to be instantiated and have their correct parameters recovered, loss of XGM, DAQ issue etc), Theo (XGM) and DOC helped us promptly.

Fortunately, the x-ray axis had not changed much. The beam was still exactly on the FEL imager cross. After the recovery of the M2/M3 feedback (recovering the correct parameters was somewhat time-consuming, though), the beam correctly went to the HED popin cross. The beam height at the sample location was about 30 um off. The temporal synchronization between the x-ray and laser was changed to about 4 ps compared to that of the morning.

We started taking data at ~ 9 pm. Since then, until the shift handover at 11pm, users could acquire data very smoothly. They will continue acquiring data with the night shift (11pm - 7 am) members.

|

|

361

|

23 Sep 2023, 15:35 |

Jan Gruenert | PRC | Issue | SASE2 :

The unavailability of karabo and possibly missing cooling water is a problem for the tunnel components as well.

XGM in XTD6 : server error since about 11am. We don't know about the status of this device anymore, neither from DOOCS or karabo.

VAC-OCD is informed and will work with EEE-PLC-OCD to check and secure SA2 tunnel systems possibly via PLC.

Main concerns: XGM and cryo-cooler systems. Overheating of racks which contain XGM and monochromator electronics.

16h00 SA2 karabo is back online (partially) !

More good news:

together with VAC-OCD we see that the vacuum system of SA2 tunnel is fine, unaffected by the power cut and the karabo outage. Pressures seem fine.

Janusz Malka found for the SA3 GPFS servers that the balcony room redundancy cooling water didn't start, and no failure was reported.

16h25 DOC info

Cooling in rack rooms ok. GPFS servers are up, ITDM still checking. DAQ servers up.

16h30 XTD6-XGM is down.

Vacuum ok but MTCA crate cannot be reached / all RS232 connections not responding. XPD and Fini checking, but it looks like an access is required. Raimund Kammering also checking crate.

16h30 FXE:

Optical laser shutter in error state. LAS-OCD informed. Therefore FXE is not taking beam now.

Actually the optical laser safety shutter between FXE optical laser hutch and experimental hutch has a problem and LAS-OCD cannot help. SRP to be contacted.

16h40 info ITDM:

recovery of SA2 GPFS hardware after cooling failure is completed. Everything seems to be working. Only few power supplies are damaged but there is redundancy.

16h40 info RC

SA2 beam is now blocked by Big Brother since it receives an EPS remote power limit of 0 W. This info is now masked until all SA2 karabo devices are back up (e.g. bunchpattern-MDL is still down).

17h30 info by VAC-OCD

Vacuum systems SA1+SA2+SA3 are checked OK. Only some MDLs needed to be restarted.

Some more problems with the cryo compressor on monochromators. 2 on HED and 1 on MID. Vacuum pressure is ok but if users need the mono it will not be possible. VAC-OCD checks back with HED when they are back in the hutch. |

|

360

|

23 Sep 2023, 14:49 |

Jan Gruenert | PRC | Status | Update of power outage aftermath

Achieved by now / status

- SASE1

- beam delivery and FXE operating fine (beam back since about 11h30)

- SASE3

- SA3 getting beam since about 11h30, again difficulties / no beam between 12h30 - 13h34, currently ok.

- SASE3 Optical PP laser issues since the morning. DOC support was required by LAS-OCD (task completed by DOC at 14h30)

- LAS-OCD working on SA3 PP laser and estimate to have it operational around 16h.

- SASE2

- the inoperational MTCA crate for MPS was successfully restarted and AIBS alarms cancelled in PLC

- beam permission was recovered and XFEL is lasing in XTD1 until shutter

- karabo of SA2 is DOWN, DOC working on it (see below)

Main remaining issues:

- Complete restart of karabo for SASE2 is required. This is a long operation, duration several hours. DOC working on it.

- SASE2 Online-GPFS is down, probably due to lack of cooling. Possible hardware damage, ITDM assessing and working on it.

- SASE3 optical PP laser to be brought back

|

|

359

|

23 Sep 2023, 08:50 |

Jan Gruenert | PRC | Issue | Updates on power glitch

8h50 No feedback from TS-OCD (still not arrived at SASE3 / SCS ?). Phone is busy. Apparently they are busy working on it in the hall (confirmed at 9h00).

There is a PANDORA radiation monitor which has no power, but it is needed to resolve the interlock issue in FXE + SCS

Important:

regular SCS phone cannot be reached anymore (no power). Please use this number instead to call SCS hutch: 86448

Update 9h15

- SXP/SQS informed by email, SQS informed / confirmed by phone (Michael Meyer), they will check their hutch and equipment. No phone contact to SXP.

- FXE info: power back at PANDORA but interlock error persists

Beam is back to SA2 / XTD6 since 7h35.

9h20 Info by TS-OCD / Christian Holz: power at SASE3 area in hall is now back.

9h25 FXE:

The affected Pandora without power was PANDORA X05 in the SASE3 SXP hutch.

That PANDORA now has power back, but the FXE hutch interlock error is still persisting.

D3 Wolfgang Clement is informed and said he might have to come in to take care of the alarm.

9h40 info RC:

"new" problem, now SA2 : Balcony MPS crate SA1 balcony room , communication lost to "DIXHEXPS1.3 server - MPS condenser"

09:55 info FXE:

Wolfgang Clement was at FXE and has reset "all the burnthrough monitors", in total 5 units, "including also SXP and SPB optics". At least at FXE the X-ray interlock is now fully functional, confirmed.

10:05 Info SCS:

their interlock panel shows an error, which probably just has to be acknowledged. FXE / SCS will communicate and solve it.

10h35 BKR info:

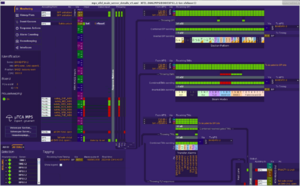

AIBS in all hutches of SA1/3 are blocking beam. Also DIXHEXPS1.3 is blocking beam to SA2. see att#1 and att#2.

11h10

EEE-OCD is working on AIBS / MPS from PLC/karabo side.

11h25 EEE-OCD:

PLC errors cleared for all AIBS.

11h30

beam to SA1/3 should be possible now. SA2 still blocked. The MTCA crate apparently needs to be power cycled.

EEE-Fast Electronics OCD is informed through DOC.

12h00

EEE-PLC-OCD has succeeded to clear errors on the karabo/PLC side of AIBS. Now beam to SA1/3 is re-established.

However, the MTCA crates XFELcpuDIXHEXPS2 (and XFELcpuSYNCPPL7) might have power but are not operational (MCH unreachable) and must be locally power-cycled.

These are "DESY-operated" crates, not "EuXFEL-MTCA-crates".

Nevertheless, DOC staff with online instruction by EEE-FE will go to the balcony room and reboot crates locally as required/requested by RC. Hopefully this will bring us back beam permission to SA2.

12h10

Another new issue: SA2 karabo is down. DOC is working on this. No control possible of anything in SASE2 tunnel via karabo.

PRC instructs BKR to close the shutter between XTD2 and XTD6 (as agreed also with HED) for safety reasons, so that the beam won't be uncontrolled once SA2 gets beam permission back.

12h45 info from SCS:

a) the SCS pump-probe laser has been down since the morning (and they absolutely need this for the experiment), they are in contact with LAS-OCD, but LAS-OCD is waiting for DOC support to recover motor positions etc.

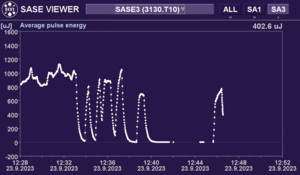

b) SCS received XFEL beam at 11h30 but since 12h33 the beam is intermittently interrupted (see att#3) or completely off. BKR informed but interruptions not yet understood.

12h50

The crate XFELcpuDIXHEXPS2 appeared to be revived. It reads OK on the DOOCS panel Controls--> MTCA crates --> XHEXP, but after some moments again in error (device offline).

Info RC: Tim Wilksen / DESY and team are working on this from remote and might come in if broken hardware needs to be exchanged.

13h40

SCS had reported at 12h45 that the beam is always set to zero. SCS and PRC investigated but couldn't find any EPS / karabo item that would do this.

We suspect that some karabo macro is asking to set the beam to 0 bunches but cannot find anything.

We then disabled the user control of the number of bunches from BKR. Now SCS receives beam but has to call BKR if they want to change the number of bunches. To be followed up by DOC once they have time.

14h20

I see now that the crate suddenly is OK, which probably means that it was finally cured by somebody. Beam permission to SA2 is back.

14h40

Beam is back in XTD1 (SA2) until the shutter XTD1/XTD6 and checked. Currently 300uJ.

Beam monitoring without karabo is possible in DOOCS with XGM (unless vacuum problems come up) and the Transmissive Imager.

Now the HED shift team is leaving until DOC has recovered karabo. Info will be circulated to HED@xfel.eu when this is achieved.

|

| Attachment 1: JG_2023-09-23_um_10.28.37.png

|

|

| Attachment 2: JG_2023-09-23_um_10.43.59.png

|

|

| Attachment 3: JG_2023-09-23_um_12.46.35.png

|

|

|

358

|

23 Sep 2023, 08:14 |

Peter Zalden | FXE | Issue | FXE interlock in fault state. See photo attached with original error message from 6:53h. It cannot be reset by acknowledging. BKR is informed and they are contacting MPS OCD. |

| Attachment 1: 20230923_074956.jpg

|

|

|

357

|

23 Sep 2023, 07:59 |

Oliver Humphries | HED | Shift summary | Shift summary from Monday 18th 7am until Saturday 7am

MONDAY

- Aligned beamline until the beamstop. Macro for recovering mirror positions worked well. Minimal fine tuning required.

- Changed energy to 8.4 keV to align spectrometers on Ni K-alpha emission.

- Characterized focal spot of the x-rays at interaction point via edge scans. Focus of ~4 um as requested by users.

- Aligned all laser imaging systems to the interaction point

TUESDAY

- Verified the xfel photon energy with the HED spectrometers. The energy was off by 30 eV. Tuned the energy for real 7.9 keV, in the BKR screen appears as 7.87 keV

- Beamline transmission scans. Focusing CRLs in the optics hutch give 55% transmission. This means from the 700 uJ from XGM1 we get around 250uJ at the interaction point (accounting also for ~75% transmission through the mirrors)

- First ReLaX + X-ray test shots. Fine tuned some timings, filtering on the Streaked Optical Pyrometry, etc...

- Cancelled night shift, due to no X-rays on Wednesday. Usually at this point we would align targets over night and shoot them during the day.
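The transmission estimate from Tuesday can be re-done as a simple product of the quoted factors. This is a minimal sketch only: the function name is hypothetical, and it assumes the two factors from the entry (55% for the CRLs, ~75% for the mirrors) simply multiply. The plain product comes out somewhat higher than the ~250 uJ quoted in the entry, so that figure may include rounding or further losses not listed here (an assumption).

```python
from functools import reduce

def energy_at_sample_uj(xgm_energy_uj, transmissions):
    """Multiply an upstream pulse energy by a chain of transmission factors.

    Hypothetical helper for illustration; assumes the factors are independent
    and simply multiply along the beamline.
    """
    return reduce(lambda energy, t: energy * t, transmissions, xgm_energy_uj)

if __name__ == "__main__":
    # Values taken from the Tuesday entry: 700 uJ at XGM1,
    # 55% through the focusing CRLs, ~75% through the mirrors.
    estimate = energy_at_sample_uj(700.0, [0.55, 0.75])
    print(f"Estimated pulse energy at interaction point: {estimate:.0f} uJ")
```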

THURSDAY

- Beam was not exactly at the same position at the XFEL imager as Monday. We needed to recheck the beamline alignment and overlap of all our beams at the interaction point. (We need to overlap in a 5x5 um2 beamspot.)

- Rechecked the X-ray energy. This time it was 110 eV off. The BKR number is 7.79 keV for real 7.9 keV.

- Further checks on spatial and timing overlap.

- Essentially, we could recover the conditions from Tuesday evening by late Thursday afternoon.

- Late evening: uTCA1 stopped working. We switched the Mastertimer to uTCA2, waiting for an opportunity on Friday to solve it. We also observed an issue with an outdated trainId from the PPU being stored in the DAQ. We contacted DOC for both issues.

- Late evening, series of laser shots to estimate the optimal laser conditions for the experiment.

- First full data sequence collection.
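For bookkeeping of the photon energy offsets noted on Tuesday and Thursday, a small helper like the following could be used. It is a sketch with hypothetical function names; it assumes the offset is simply the difference between the energy measured with the HED spectrometers and the number shown on the BKR screen, and that the offset stays constant when predicting a new setpoint.

```python
def offset_ev(measured_kev, displayed_kev):
    """Offset in eV between the spectrometer-verified energy and the BKR number."""
    return round((measured_kev - displayed_kev) * 1000)

def displayed_for_target_kev(target_kev, known_offset_ev):
    """BKR number expected to give the target energy, assuming a constant offset."""
    return round(target_kev - known_offset_ev / 1000, 3)

if __name__ == "__main__":
    # Pairs (measured, displayed) taken from the Tuesday and Thursday entries.
    for day, measured, displayed in [("Tuesday", 7.9, 7.87), ("Thursday", 7.9, 7.79)]:
        print(day, offset_ev(measured, displayed), "eV")
```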

FRIDAY

- We are in data collection mode. Aligning targets and shooting them.

SATURDAY

- Power glitch at 6:50 am on Saturday morning. HED is minimally affected, beam goes down

- Beam comes back after ~1hr, but down again 30 mins later due to MPS issue (only for SASE2) which requires a server "DIXHEXPS1.3" to be reset, which could not be located.

- Simultaneously SASE2 karabo broker has pile up issues resulting in extreme latency. DOC begins to work on the problem and the whole SASE 2 topic goes down, services do not reappear. We are informed that this may require a restart of the whole broker.

|

|

356

|

23 Sep 2023, 07:31 |

Jan Gruenert | PRC | Issue | 7:23: RC info:

an electrical power glitch interrupted beam operation, machine down. DESY has power back

and accelerator is recovering beam towards TLD, but no beam possible into TLDs, apparently power issues in Schenefeld.

7:32 SCS info:

SA1+SA2 have electricity, SA3 not. TS is informed and on the way. Power went away around 6h50.

Lights in hall remained on, but everything in SA3 is off, even regular power sockets. In karabo all red.

Hutch search system indicates FAULT state.

7:45 FXE info:

FXE was not affected by any power going away, all systems are operational, but of course no beam due to SA3.

The only unusual thing was a (fire?) alarm audible at 6h53. FXE is ready and waiting for beam.

7:50 HED info:

all normal, just XFEL beam went away. RELAX laser not affected, all ok, waiting for beam.

7:55 FXE info:

Interlock fault lamp on FXE interlock panel is RED. Interlock cannot be set. FXE will contact SRP radiation protection.

8:00 RC :

MPS on-call is present at BKR. Machine will try to restart south branch, but there seems to be also an interlock by an XTD8 (sic!) shutter (?!).

08:03 FXE / SRP:

SRP informs, that they cannot help in this case but DESY MPS-OCD must solve this.

08:13 BKR:

BKR shift leader Dennis Haupt will try to contact MPS team.

Further updates in next entry https://in.xfel.eu/elog/OPERATION-2023/359 |

|

355

|

23 Sep 2023, 07:04 |

Adam Round | SPB | Shift end | Staff:JK, RDW, AR

X-ray delivery

- 7 keV, 202 pulses, 1.7 mJ, stable across train and throughout the shift

Optical laser delivery

Achievements/Observations

- faster changeover and alignment

- almost 6 hours of data acquisition - many good hits observed

- strong diffraction in hits required transmission to be reduced to 34%

Issues

- maintaining synchronisation for droplet overlap proved difficult; effective hit rate of 0.3% observed

- hits were often observed in beats as the timing passed through overlap - in some periods we could see hits in nearly every train, but this could not be maintained.

|

|

354

|

22 Sep 2023, 17:46 |

Jan Gruenert | PRC | Status | SASE3 gas supply alarm

SCS called the PRC at 17h04 due to a loud audible alarm in XHEXP1 and contacted also TS-OCD.

It turned out to be the gas supply system for SASE3, an electrical cabinet on the balcony level in the SE-corner of XHEXP1.

Actions taken by PRC:

a) contacted TS-OCD (Christian Holz, Alexander Kondraschew)

b) terminated the audible alarm

c) source for the alarm was: "Stroemungssensor -BF15.3+GSS3"

d) from this point on, TS is taking over

1) The instruments need to receive a number to call to reach the hall crew.

2) The alarm was likely triggered by the SCS team since there was a problem with the Krypton gas supply to their XGM around the same time. |

|

353

|

22 Sep 2023, 07:27 |

Raphael de Wijn | SPB | Shift summary | X-ray delivery

- 7 keV, 202 pulses, 564 kHz, ~1.3 mJ, stable delivery

Optical laser delivery

Achievements/Observations

- Collected a few NQO1 runs (no mixing) in Adaptive and FixedMG with the Ros setup, good diffraction

- Difficult to reach droplet synchronisation

|

|

352

|

22 Sep 2023, 06:51 |

Benjamin van Kuiken | SCS | Shift summary | Achievements

- Characterized the X-ray and laser damage on the STO2LNO2

Problems

- Could not find fine timing from XAS measurements performed on the sample

- MCP sees 266 nm PP laser

- SiN measurement not successful so far. It is possible that there is an issue with the SiN membranes on the sample holder

Plan for next shift

- Find fine timing

- Conclude damage study of sample.

|

|

351

|

22 Sep 2023, 00:56 |

Jan Gruenert | PRC | SA1 status | As agreed beforehand, PRC and SPB/SFX re-assessed the SA1 EPS power limit based on actual performance, required number of pulses and rep rate, at the agreed SPB photon energy of 7 keV.

The actual lasing performance is on average 1.3 mJ / pulse at the current 7 keV.

Before the change, the assumed minimum pulse energy per requested bunch was set to 3.0 mJ in the Big Brother EPS power limit SA1, where we had set the remote power limit to 5 W.

To allow for the required 202 bunches at 0.5 MHz for SPB, I changed to an assumed minimum pulse energy per requested bunch of 2.2 mJ while maintaining the 5 W limit (rather than the inverse).

Keeping the 5 W limit in this way ensures that, even if a high number of pulses were requested at 5.5 keV by human error during the shift change, this could by no means damage the CRLs.
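The arithmetic behind this change can be sketched as follows. This is an illustration only, not the Big Brother implementation: it assumes the limit is checked as bunches per train times train rate times assumed pulse energy, with the 10 Hz train repetition rate of the facility, and the function names are hypothetical.

```python
import math

# Assumption: 10 pulse trains per second.
TRAIN_RATE_HZ = 10

def max_bunches_per_train(power_limit_w, assumed_pulse_energy_mj,
                          train_rate_hz=TRAIN_RATE_HZ):
    """Largest bunch number per train that stays within the power limit.

    Hypothetical model: power = bunches * train_rate * assumed_pulse_energy.
    """
    energy_per_train_limit_j = power_limit_w / train_rate_hz
    return math.floor(energy_per_train_limit_j / (assumed_pulse_energy_mj * 1e-3))

def average_power_w(bunches, pulse_energy_mj, train_rate_hz=TRAIN_RATE_HZ):
    """Average power for a given bunch number and pulse energy."""
    return bunches * train_rate_hz * pulse_energy_mj * 1e-3

if __name__ == "__main__":
    for assumed_mj in (3.0, 2.2):
        n = max_bunches_per_train(5.0, assumed_mj)
        print(f"assumed {assumed_mj} mJ/pulse -> up to {n} bunches per train at 5 W")
    # Actual performance from the entry: about 1.3 mJ per pulse, 202 bunches.
    print(f"actual average power: {average_power_w(202, 1.3):.2f} W")
```

Under this model, an assumed 3.0 mJ per pulse would cap the request at 166 bunches per train, below the required 202, while 2.2 mJ allows up to 227 at the same 5 W limit.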

Additionally the SPB/SFX and the BKR operators were reminded about the safe procedure for the shift handover:

a) first the number of bunches has to be set to single bunch per train

b) then the wavelength could be changed

c) then the respective instrument would make sure that the beamline is in a safe state (e.g. CRL1 retracted) before a higher number of bunches would be requested (in particular at 5.5 keV). |

|

350

|

21 Sep 2023, 09:24 |

Jan Gruenert | PRC | SA1 status | Info by R.Kammering (mail 20.9.23 18h25), related to the SA1 intensity drops during large wavelength scans:

"We found some problematic part in the code of our wavelength control server.

After lengthy debugging session we (Lars F, Olaf H. and me) did find a workaround which seems to have fixed the problem.

But we are still not 100 % sure if this will work under all circumstances, but this was the best we could achieve for today.

So finally we have to see how it behaves with a real scan from your side and if needed follow up on this at a later point of time."

Together with the finding of the test by FXE this seems to have resolved the issue. |

|