| ID |

Date |

Author |

Group |

Subject |

|

38

|

28 Feb 2024, 06:57 |

Peter Zalden | FXE | Issue |

Not sure if this is the cause of the fringes reported before, but at 5.6 keV there are clear cracks visible on the XTD2 attenuators 75 um and 150 um CVD, see attached. There is also a little bit of damage on the 600 um CVD, but not as strong as on the others.

At 5.6 keV we will need to use the thin attenuators. I will mention again in case this causes problems downstream.

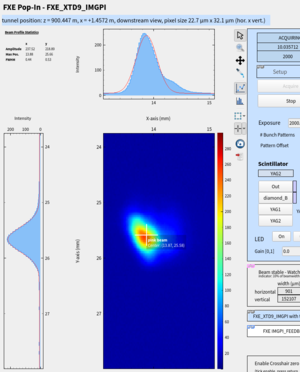

Edit 8:50h: We do not observe additional interference effects in the downstream beam profile (see attachment 4) |

| Attachment 1: 2024-02-28-064435_203161010_exflqr51474.png

|

|

| Attachment 2: 2024-02-28-064443_551842636_exflqr51474.png

|

|

| Attachment 3: 2024-02-28-064454_671377179_exflqr51474.png

|

|

| Attachment 4: 2024-02-28-085524_727294913_exflqr51474.png

|

|

|

46

|

04 Mar 2024, 09:42 |

Romain Letrun | SPB | Issue |

The number of bunches during the last night shift kept being changed down to 24 by the device SA1_XTD2_WATCHDOG/MDL/ATT1_MONITOR020 acting on SA1_XTD2_BUNCHPATTERN/MDL/CONFIGURATOR. This was not the case during the previous shifts, even though the pulse energy was comparable. Looking at the history of SA1_XTD2_WATCHDOG/MDL/ATT1_MONITOR020, it looks like this device was started at 16:26:25 on Friday, 1st March. |

| Attachment 1: xtd2_att_watchdog.png

|

|

|

50

|

15 Mar 2024, 00:07 |

Harald Sinn | PRC | Issue |

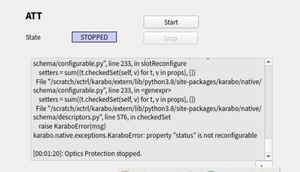

SASE1 bunch number was reduced to 10 pulses for no obvious reason.

Traced it back to a malfunction of the watchdog for the solid attenuator (see attached error message).

Deactivated the watchdog for the SASE1 solid attenuator, then re-activated it.

Issue: SASE1 (SPB?) was requesting 200 pulses at 6 keV at full power with all solid attenuators in the beam. This was a potentially dangerous situation for the solid attenuator (!)

After that the team left to go home. Nobody is available at FXE. Set pulse number to 1.

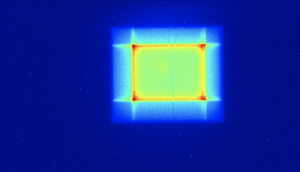

Checked the status of the solid attenuator. It seems that plates 1 and 2 have some vertical cracks (see attached picture).

|

| Attachment 1: watchdog_error.jpg

|

|

| Attachment 2: cracks_in_SA.jpg

|

|

|

52

|

15 Mar 2024, 07:08 |

Romain Letrun | SPB | Issue |

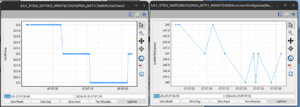

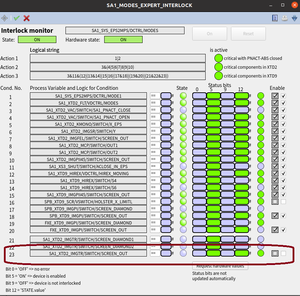

There are now two watchdogs for the XTD2 attenuator running at the same time

- SA1_XTD2_WATCHDOG/MDL/ATT1_MONITOR020

- SA1_XTD2_OPTICS_PROTECTION/MDL/ATT1

that are configured differently, which results in different pulse limits (see attachment). |

| Attachment 1: Screenshot_from_2024-03-15_07-07-40.png

|

|

|

55

|

16 Mar 2024, 12:12 |

Harald Sinn | PRC | Issue |

11:15 SASE1/SPB called to report that they observed an issue with the SASE1 XGM/XTD2: about every 35 seconds the power reading jumps from 4 W to zero and back. It seems to be related to an automated re-scaling of the Keithleys, which coincides with the jumps. The fast signal and the downstream XGMs do not show this behavior. The concern is that this may affect the data collection later today.

12:15 The XGM expert was informed via email; however, there is no regular on-call service today. If this cannot be fixed in the next few hours, the PRC advises additionally using the reading of the downstream XGM for data normalisation.

13:15 Theo fixed the problem by disabling auto-range. Needs follow-up on Monday, but for this weekend should be ok. |

|

56

|

20 Mar 2024, 12:41 |

Frederik Wolff-Fabris | PRC | Issue |

BKR called the PRC around 06:10 to report vacuum issues at the SA1 beamline that led to a loss of beam permission for the North Branch.

VAC-OCD found that an ion pump in the PBLM / XTD9 XGM section showed a pressure spike that triggered the valves in the section to close.

After the valves were reopened, the beam permission was restored; investigation and monitoring will continue over the coming days. Currently all systems work normally.

https://in.xfel.eu/elog/Vacuum-OCD/252 |

|

65

|

28 Mar 2024, 14:56 |

Chan Kim | SPB | Issue |

Pulse energy fluctuations observed after full-train operation in SA2 started. |

| Attachment 1: Screenshot-from-2024-03-28-14-26-18_1.png

|

|

|

67

|

30 Mar 2024, 06:59 |

Romain Letrun | SPB | Issue |

DOOCS panels are again incorrectly reporting the FXE shutter as open when it is in fact closed. This had been fixed after it was last reported in February (elog:24), but has now reappeared. |

| Attachment 1: Screenshot_from_2024-03-30_06-58-42.png

|

|

|

69

|

30 Mar 2024, 22:02 |

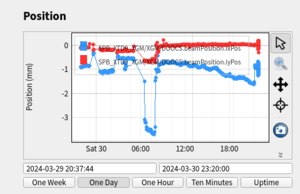

Chan Kim | SPB | Issue |

Continuous pointing drift in the horizontal direction.

It may have triggered a vacuum spike at SPB OH (MKB_PSLIT section) at 21:07.

|

| Attachment 1: Screenshot_from_2024-03-30_21-59-03.png

|

|

| Attachment 2: Screenshot_from_2024-03-30_21-09-11.png

|

|

|

91

|

01 May 2024, 16:39 |

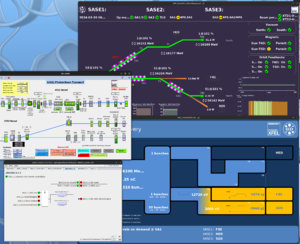

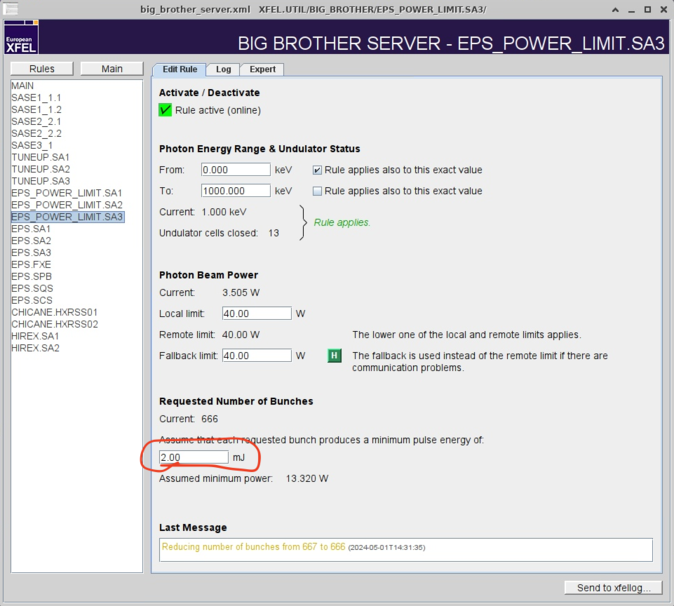

Andreas Galler | PRC | Issue |

840 pulses not possible at SXP due to big brother settings for the EPS Power Limit rule. The minimum pulse energy was assumed to be 6 mJ while the actual pulse XGM reading is 550 uJ.

Solution: The minimum assumed pulse energy has been lowered from 6 mJ to 2 mJ. This shall be reverted on Tuesday next week.
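For illustration only, a minimal sketch of why the assumed minimum pulse energy matters for such a rule. It assumes the rule estimates average power as pulses per train times assumed pulse energy times train rate, and it assumes a 10 Hz train rate; neither the rule's actual formula nor its limit value is given in this entry, and the name `average_power_w` is hypothetical.

```python
# Hypothetical model: average power = pulses/train * energy/pulse * trains/s.
TRAIN_RATE_HZ = 10.0  # assumption, not stated in the entry


def average_power_w(n_pulses: int, pulse_energy_j: float,
                    train_rate_hz: float = TRAIN_RATE_HZ) -> float:
    """Average beam power in watts for a given pulse count and pulse energy."""
    return n_pulses * pulse_energy_j * train_rate_hz


# Pulse energies quoted in the entry: old assumed, new assumed, XGM reading.
cases = {
    "assumed 6 mJ": 6e-3,
    "assumed 2 mJ": 2e-3,
    "measured 550 uJ": 550e-6,
}

for label, energy_j in cases.items():
    power = average_power_w(840, energy_j)
    print(f"840 pulses, {label}: {power:.2f} W")
```

Under these assumptions, the 6 mJ setting overestimates the power at 840 pulses by roughly a factor of 11 relative to the measured pulse energy, while the 2 mJ setting still leaves a margin of about a factor of 3.6.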

|

|

96

|

08 May 2024, 13:51 |

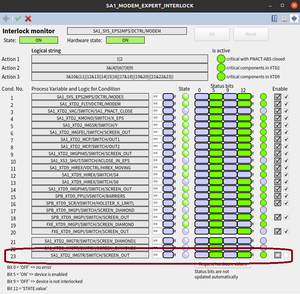

Raúl Villanueva-Guerrero | PRC | Issue |

Dear all,

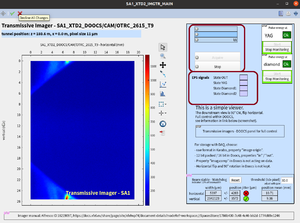

Due to a probable issue at the SA1 Transmissive imager, the "OUT" and "YAG" position indication signals seem to be unresponsive.

After the FXE team noticed that MODE S was interlocked, the root cause was narrowed down to this component. Further investigation with A. Koch & J. Gruenert (XPD) later confirmed this. Apparently, recabling work performed during yesterday's ZZ may have something to do with this issue.

Once the complete retraction of all the screens had been jointly confirmed via DOOCS and Karabo, the affected interlock condition (see attached picture) was disabled for the rest of the week to allow normal operation of SA1 (release of MODE S and MODE M).

Further investigation and the eventual resolution of the issue are expected to happen on Tuesday next week via ZZ access.

With best regards,

Raul [PRC@CW19] |

| Attachment 1: image_2024-05-08_135756593.png

|

|

| Attachment 2: image_2024-05-08_140027070.png

|

|

| Attachment 3: image_2024-05-08_140101131.png

|

|

| Attachment 4: image_2024-05-08_140228955.png

|

|

|

99

|

10 May 2024, 00:04 |

Rebecca Boll | SQS | Issue |

our pp laser experienced timing jumps of 18 ns today. this happened for the first time at ~7:30 in the morning without us noticing, and a couple more times during the morning. we realized it at 15:30 because the signal on our pulse arrival monitor (X-ray/laser cross correlator downstream of the experiment) disappeared. we spent some time investigating it, until we also saw this jump on two different photodiodes with different triggers (oscilloscope and digitizer), so it was clear that it is indeed the laser timing jumping. we also see the laser spectrum and intensity change for the trains that are offset in timing.

we called laser OCD at ~16:30. they have been trying to fix the problem by readjusting the instability zone multiple times and by adjusting the pockels cell, but this did not change the timing jumps. at 21:30 they concluded that there was nothing more they could do tonight and a meeting should happen in the morning to discuss how one can proceed.

effectively, this means that we lost the entire late and night shifts today for the pump-probe beamtime and have some data of questionable quality from the early shift as well. |

|

105

|

14 May 2024, 09:56 |

Andreas Koch | XPD | Issue |

EPS issue for Transmissive imager SA1 (OTRC.2615.T9) w.r.t Operation eLog #96

The motor screen positions w.r.t. the EPS switches had been realigned (last Wednesday 8th May), tested today (14th May) and the EPS is again enabled for the different beam modes. All is back to normal. |

|

110

|

25 May 2024, 02:38 |

Rebecca Boll | SQS | Issue |

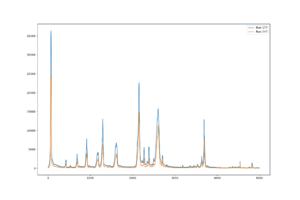

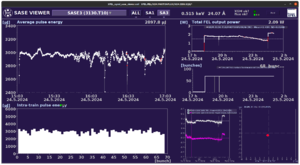

Today in the afternoon, SASE1 was tuned (a description of the activities is in att 4). We now realize that this has in some form changed the SQS pulse parameters.

att 1 shows ion spectra recorded under nominally the same photon parameters before (run 177) and after (run 247)

it's also visible in the history of the SASE viewer that the behavior of the SASE3 pulse energy is different before and after 15:45, which is right when SASE1 was tuned. it clearly started fluctuating more. however, we don't think that the pulse energy fluctuations themselves are the reason for the large change in our data, we rather suspect a change in the pulse duration and/or the source point to cause this. however, we have no means to characterize this now after the fact.

it is particularly unfortunate that the tuning happened exactly BEFORE we started recording a set of reference spectra on the SASE3 spectrometer, which were supposed to serve as a calibration for the spectral correlation analysis to determine the group duration, as well as a training data set to use the PES with machine learning as a virtual spectrometer for the large data set taken in the shifts before. |

| Attachment 1: Run_177_vs_247.png

|

|

| Attachment 2: Screenshot_from_2024-05-25_00-44-46.png

|

|

| Attachment 3: Screenshot_from_2024-05-25_01-03-29.png

|

|

| Attachment 4: Screenshot_from_2024-05-25_01-36-54.png

|

|

|

131

|

24 Jul 2024, 10:26 |

Harald Sinn | PRC | Issue |

In SASE2, undulator cell 10 has issues. Suren K. will do a ZZ at 11:30 to fix it (if possible). Estimated duration: 1-3 hours.

Update 12:30: The problem is more severe than anticipated. Suren needs to get a replacement driver from the lab and then go back into the tunnel at about 14:00.

In principle, the beam could be used by HED until 14:00.

Update 13:30: Beam was not put back, because energizing and de-energizing the magnets would take too much time. Whenever Suren is ready, he will go back into XTD1 and try to fix the problem.

Update 17:30: Suren and Mikhail were able to do the repair and are now finishing the ZZ. A hardware piece (motor driver) had to be exchanged, and it turned out that the Beckhoff software on it was outdated. Getting the required feedback from Beckhoff took considerable time, but now everything looks good. |

|

132

|

24 Jul 2024, 11:35 |

Harald Sinn | PRC | Issue |

The control rack of the soft mono at SASE3 has some failure. Leandro from EEE will enter the XTD10 at 12:00.

Beam will be interrupted for SASE1 and SASE3.

Estimated time to fix it (if we are lucky) is one hour.

Update 12:30: Problem is solved (fuse was broken, replaced, motors tested)

Update 13:30: Beam is still not back, because BKR has problems with their sequencer |

|

134

|

27 Jul 2024, 09:10 |

Harald Sinn | PRC | Issue |

SASE1: Solid attenuator att5 is in error. When trying to move it out, the arm lifts but does not reach the final position before the software time-out. We increased the software time-out from 4 seconds to 7 seconds, which didn't help. We suspect that there is a mechanical problem that prevents the att5 actuator from reaching the out position.

SPB states that it is ok to leave the attenuator arm in over the weekend, because they run mostly detector tests and don't require full intensity. |

|

142

|

10 Aug 2024, 07:19 |

Romain Letrun | SPB | Issue |

The SASE1 HIREX interlock is not working as expected.

At the position 'OUT' (step 2 in HIREX scene) and while none of the motors are moving, the interlock SA1_XTD9_HIREX/VDCTRL/HIREX_MOVING is triggered, which prevents the use of more than two pulses.

SA1_XTD9_HIREX/VDCTRL/HIREX_MOVING remains in INTERLOCKED state while moving HIREX in (expected behaviour) and finally goes to ON state when HIREX is inserted, thus releasing the MODES and MODEM interlocks and allowing normal beam operation. |

| Attachment 1: Screenshot_from_2024-08-10_07-17-38.png

|

|

|

159

|

08 Sep 2024, 20:30 |

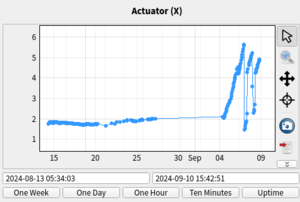

Peter Zalden | FXE | Issue |

We observe a continuous horizontal beam drift in SA1 after the M1 incident. It seems not to converge, so one can wonder where this will go....

The attached screenshot shows the M2 piezo actuator RY, which keeps the beam at the same position on the M3 PBLM. These are about 130 m apart. The drift is 1 V per 12 hours; 0.01 V corresponds to a beam motion of 35 um, so the drift velocity is about 0.3 mm/hour. In the past days this has at least once led to strong leakage around M2. But the two feedbacks in the FXE path are keeping the beam usable most of the time. |
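The drift velocity quoted in this entry can be reproduced with a few lines of arithmetic. The sketch below uses only the numbers given above (1 V per 12 hours, 35 um per 0.01 V); the variable names are illustrative and it assumes the piezo response is linear over this voltage range.

```python
# Numbers taken from the entry above.
DRIFT_VOLTS = 1.0          # piezo voltage change ...
DRIFT_HOURS = 12.0         # ... over this many hours
UM_PER_STEP = 35.0         # beam motion in micrometres ...
VOLTS_PER_STEP = 0.01      # ... per this voltage step

# Voltage drift rate of the M2 RY piezo actuator (V/hour).
drift_v_per_hour = DRIFT_VOLTS / DRIFT_HOURS

# Beam displacement per volt, assuming a linear piezo response (um/V).
um_per_volt = UM_PER_STEP / VOLTS_PER_STEP

# Resulting beam drift velocity at the M3 PBLM.
drift_um_per_hour = drift_v_per_hour * um_per_volt
drift_mm_per_hour = drift_um_per_hour / 1000.0

print(f"drift: {drift_um_per_hour:.0f} um/hour = {drift_mm_per_hour:.2f} mm/hour")
```

This gives about 0.29 mm/hour, consistent with the rounded 0.3 mm/hour stated in the entry.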

| Attachment 1: 2024-09-08-194936_755701995_exflqr51474.png

|

|

|

161

|

09 Sep 2024, 23:19 |

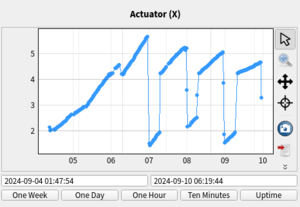

Peter Zalden | FXE | Issue |

Finally, the drift velocity of the pointing has dropped by approximately a factor of 2 compared to yesterday, see attached. Possibly it will still converge... |

| Attachment 1: 2024-09-09-231922_609331430_exflqr51474.png

|

|